An AI-Powered App Raises the Risk of Supply Chain Attacks by Exposing User Data

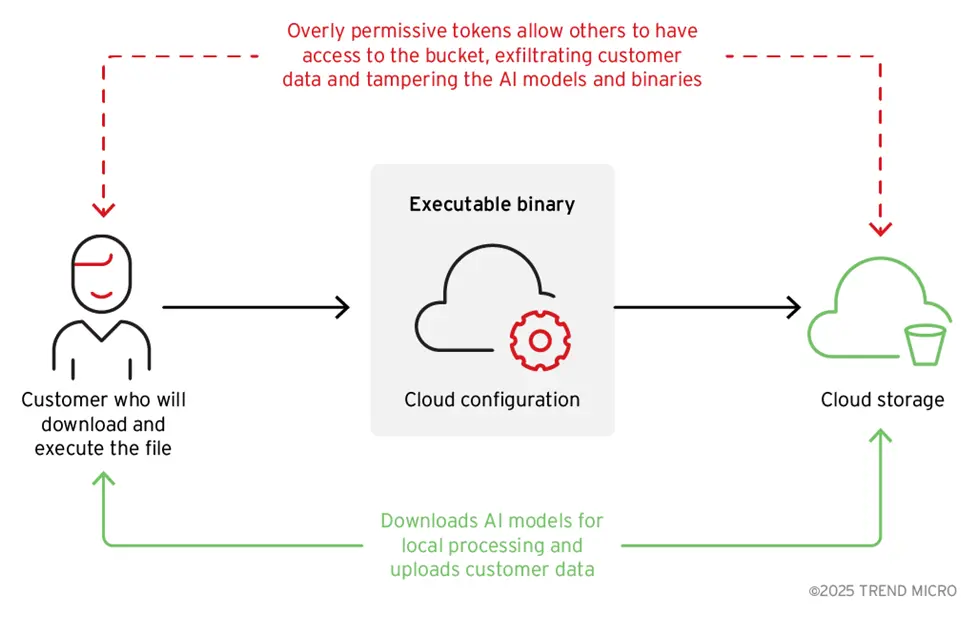

- Wondershare RepairIt is an AI-powered tool for enhancing photos and videos. It may have unintentionally violated its own privacy policy by collecting and retaining private user images. Poor Development, Security, and Operations (DevSecOps) practices left overly permissive cloud access tokens embedded in the application’s code.

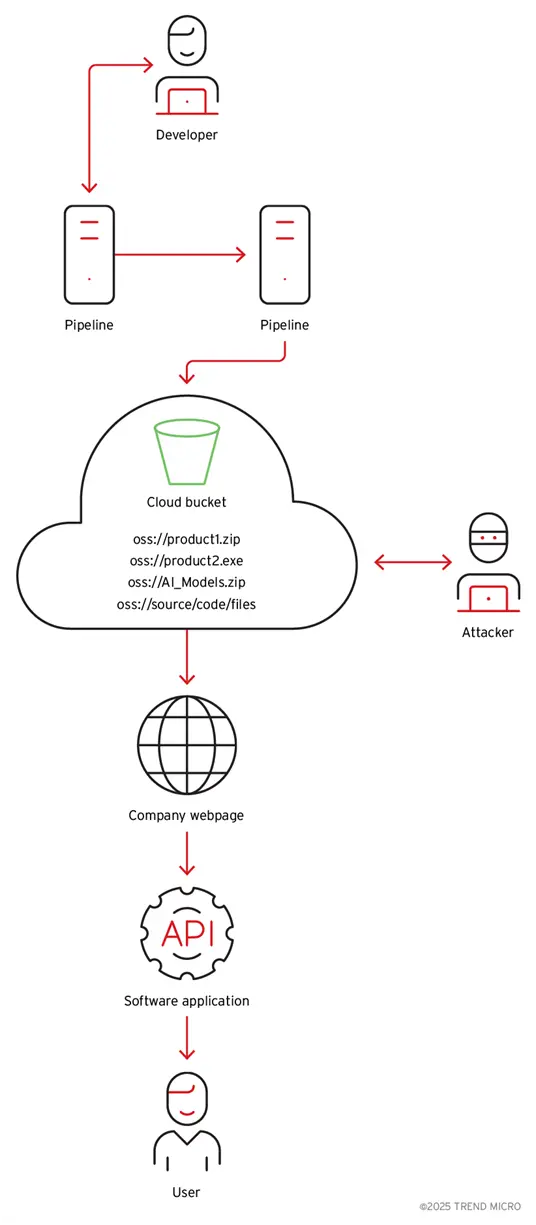

- The cloud credentials hardcoded in the application’s binary granted both read and write access to private cloud storage. In addition to customer data, the exposed cloud storage contained software binaries, container images, AI models, and company source code.

- With the compromised access, attackers could carry out sophisticated supply chain attacks by manipulating executable files or AI models. Such an attack could use vendor-signed software updates or AI model downloads to deliver malicious payloads to legitimate users.

In the AI era, organizations must take two crucial security factors into account, especially for AI-powered applications: the integrity of AI model deployment, and the consistency between the company’s privacy policy and its actual data handling practices. In one case, according to published security research, Wondershare RepairIt, an AI photo editing application, collected, stored, and unintentionally leaked private user data because of inadequate Development, Security, and Operations (DevSecOps) practices, in contradiction of the company’s privacy policy.

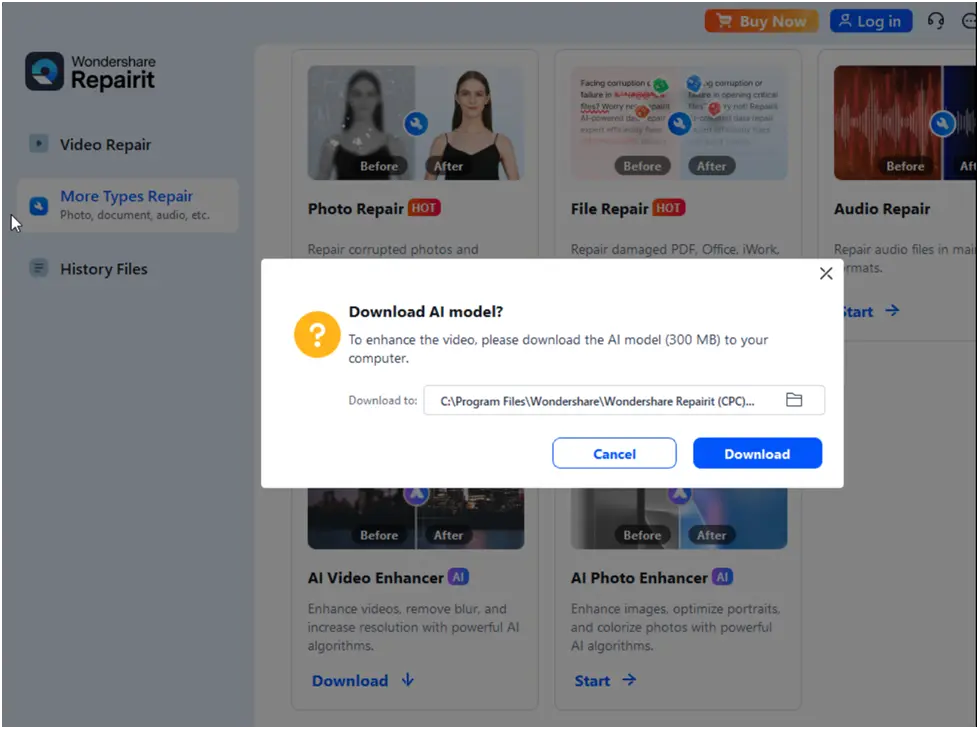

As seen in Figure 1, the program states clearly that user data will not be saved. The same claim appears on the vendor’s website. However, we observed that private user images were retained and, because of security lapses, subsequently exposed.

According to our investigation, the application’s code contained an overly permissive cloud access token as a result of poor DevSecOps practices. This token exposed sensitive data kept in the cloud storage bucket. Additionally, because the data was not encrypted, anyone with even rudimentary technical expertise could access it, download it, and use it against the company.

As we have seen in earlier research, developers frequently disregard security guidelines and embed overly permissive cloud credentials directly in the code.

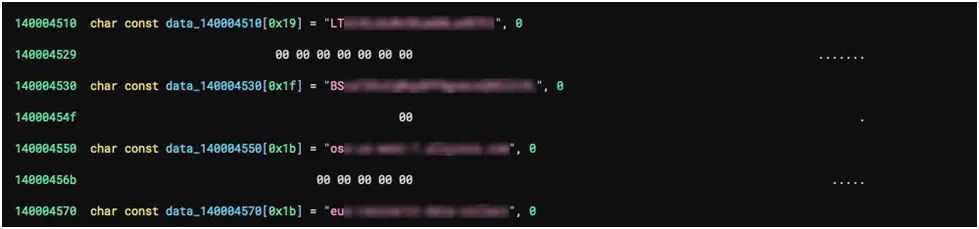

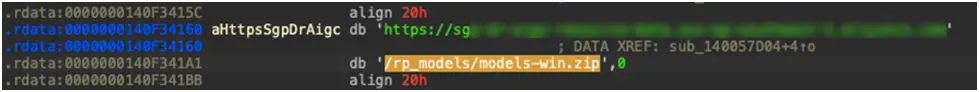

In this case, the credentials were embedded in the compiled binary executable (Figure 2). Although this approach may seem practical because it simplifies backend operations and the user experience, it puts the company at serious risk if it is not implemented properly.

It is essential to secure the entire architecture. Clearly defining the purpose of each storage location, establishing access controls, and granting credentials only the permissions they need can prevent catastrophic scenarios in which attackers download the compiled binaries, analyze them, and use the embedded credentials for purposes unrelated to their intended function.

Hardcoding a cloud storage access token with write permissions directly into binaries is a common practice, typically for shipping application logs or collecting metrics. Most implementations, however, severely restrict the token’s permissions: data can be written to cloud storage, for instance, but it cannot be read back.
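The write-only restriction described above can be expressed as a simple pre-release check. The following is a minimal sketch, not the vendor’s or any cloud provider’s actual permission model: the `TokenGrant` type, the `object:put` style action names, and both helper functions are hypothetical and exist only to illustrate the idea of rejecting a token that can do more than write under a dedicated prefix.

```python
from dataclasses import dataclass

# Hypothetical, provider-neutral action names used only for illustration.
# Real cloud providers define their own permission vocabularies.
WRITE_ONLY_ACTIONS = frozenset({"object:put"})


@dataclass(frozen=True)
class TokenGrant:
    """What a token is allowed to do (assumed, simplified model)."""
    bucket: str
    key_prefix: str
    actions: frozenset


def excess_actions(grant, allowed=WRITE_ONLY_ACTIONS):
    """Return the granted actions that go beyond the allowed set, sorted."""
    return sorted(set(grant.actions) - set(allowed))


def is_write_only_scoped(grant):
    """True if the token can only write, and only under a non-empty prefix."""
    has_prefix = grant.key_prefix.strip("/") != ""
    return has_prefix and not excess_actions(grant)
```

A token limited to `object:put` under `logs/` would pass this check, while a token that can also read or list the whole bucket would be flagged. Passing such a check does not make embedding a token risk-free; it only narrows what a leaked token can do.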

Figure 2. Showcasing the poor implementation of cloud storage use

The origin of the vulnerable code snippet is unknown; it may have been written by a person or generated by an AI coding assistant. Regardless, companies should be especially careful when using cloud services. A single leaked access token can, for instance, enable threat actors to inject malicious code into distributed software binaries, which could start a supply chain attack. Leaks of this kind frequently have catastrophic results.

The vendor was informed of these vulnerabilities in April and was given access to the final draft of this blog post before publication. Despite our proactive attempts to reach them, we have not received a response from the vendor at the time of writing.

The vulnerabilities were first disclosed through a well-known vulnerability disclosure program. They were published on September 17 and assigned CVE-2025-10643 and CVE-2025-10644.

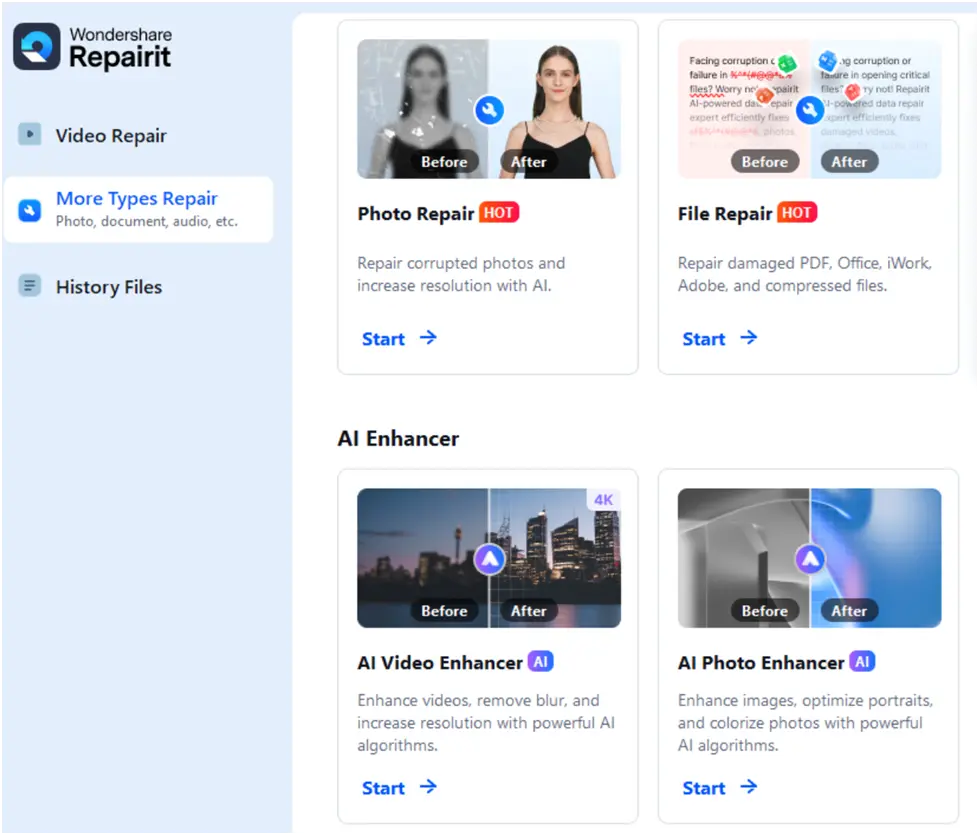

Binary analysis: Credential exposure and data leakage

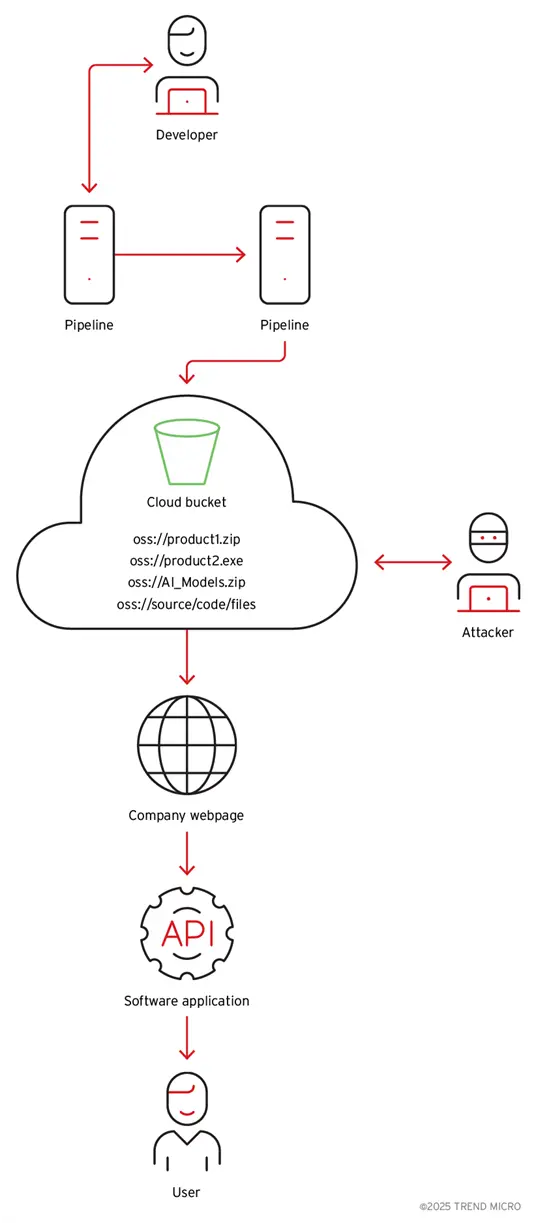

The discovery started with the downloaded binary, a client application heavily advertised on the company’s official website as a powerful, easy-to-use tool that repairs damaged photos and videos using patented methods with AI as its core engine (Figure 3).

Figure 3. Wondershare RepairIt highlights and advertisement

The binary analysis showed the application uses a cloud storage account with hardcoded credentials. The storage account was not only used to download AI models and application data; we found that the account also contained multiple signed application executables developed by the company. It also had sensitive customer data (Figure 4), all accessible due to the cloud object storage identifiers (URLs and API endpoints), a secret access ID and key, and defined bucket names present in the binary.
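To illustrate how little effort this kind of finding requires, the following is a minimal sketch of a strings-style scan that pulls printable ASCII runs out of a binary and flags those that look like endpoints or key assignments. It is not the tooling used in the research; the regular expressions, the `SUSPICIOUS` table, and the function names are illustrative assumptions, and real secret scanners use far richer rule sets.

```python
import re

# Runs of at least 8 printable ASCII bytes, similar to the Unix `strings` tool.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{8,}")

# Illustrative patterns only; these are assumptions, not a complete rule set.
SUSPICIOUS = {
    "url": re.compile(r"https?://[\w.\-]+(?:/[\w.\-/%]*)?"),
    "key_assignment": re.compile(
        r"(?i)(access[_-]?key|secret|token|passw(or)?d)\w*\s*[=:]\s*\S{8,}"
    ),
}


def extract_strings(data):
    """Return printable ASCII runs found in raw bytes, in order."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def find_candidates(data):
    """Return (label, string) pairs for strings matching a suspicious pattern."""
    hits = []
    for text in extract_strings(data):
        for label, pattern in SUSPICIOUS.items():
            if pattern.search(text):
                hits.append((label, text))
    return hits
```

Running `find_candidates(open(path, "rb").read())` against a release build in the CI/CD pipeline is one way a team could catch embedded endpoints and keys before a binary ships; every hit still needs manual review, since patterns like these produce false positives.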

Figure 4. Binary analysis showing the cloud ID, secret, address, and bucket name

Further investigation showed that the credentials hardcoded in the binary had been granted both read and write access to the bucket. AI models, container images, binaries for other products from the same company, scripts and source code, and customer data (such as images and videos) were all stored in the same cloud storage.

Figure 5. Diagram on how the binary with cloud access is distributed to the user

Private data exposure: The first critical issue

We discovered that the insecure storage service held customer-uploaded data going back two years before this study, which raises serious privacy concerns and regulatory ramifications, especially under the General Data Protection Regulation (GDPR) of the European Union (EU), the Health Insurance Portability and Accountability Act (HIPAA) of the United States, or comparable frameworks. The exposed data included thousands of sensitive, unencrypted personal photos that customers had uploaded for AI-driven enhancement (Figure 6).

Figure 6. Wondershare RepairIt’s initial screen showing its tools

Beyond the immediate risk of regulatory penalties, reputational harm, and loss of competitive advantage through intellectual property theft, the exposure of such data also gives threat actors an opportunity to launch targeted attacks against the company and its customers.

The supply chain issue: Manipulating AI models

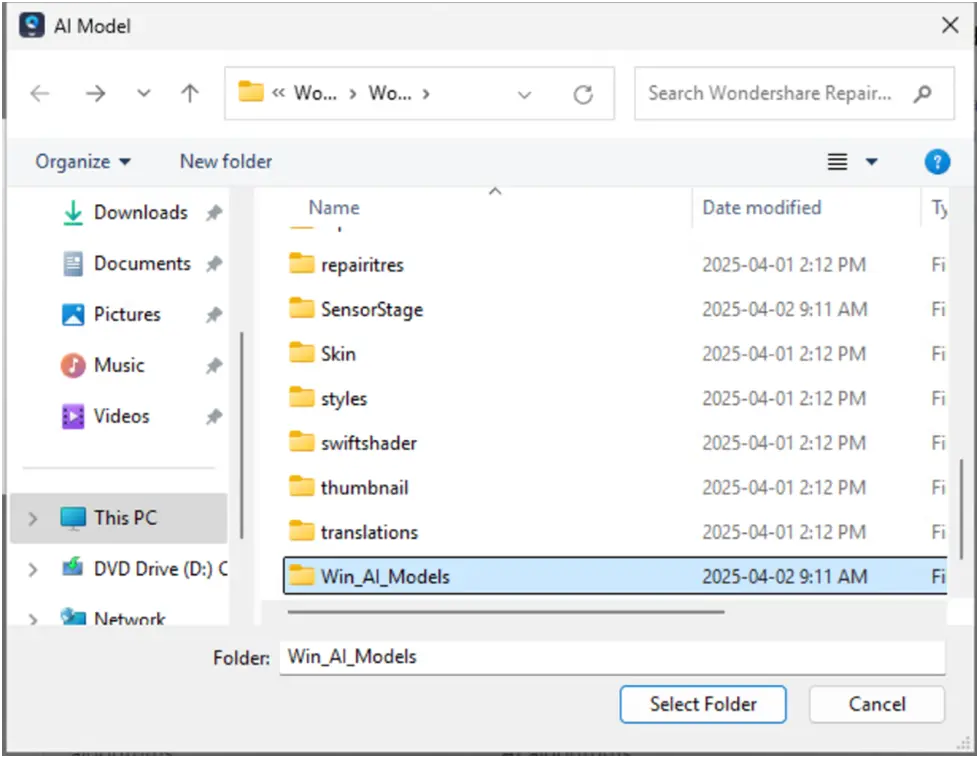

When users interact with Wondershare RepairIt, a pop-up window asks them to download AI models directly from the cloud storage bucket in order to enable local services (Figure 7). The exact bucket address and the name of the AI model zip file to be downloaded are defined in the program (Figure 8).

The risk of a sophisticated AI supply chain attack is arguably even more worrying than the exposure of customer data. As in other cases we have discussed previously, because the binary automatically retrieves and runs AI models from the insecure cloud storage, attackers could alter these models or their configurations and infect users without their knowledge (Figure 9).

This opens up several attack scenarios in which malicious actors could:

- Replace legitimate AI models or configuration files in the cloud storage.

- Modify software executables and launch supply chain attacks against the company’s customers.

- Compromise models to run arbitrary code, establish persistent backdoors, or covertly exfiltrate additional customer data.
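One common mitigation for the scenarios above is for the client to check a downloaded model archive against a pinned digest before unpacking or loading it. The following is a minimal sketch of that idea, not a description of how the application works: the function names are hypothetical, and it assumes the expected SHA-256 value reaches the client through a channel the attacker cannot modify, such as the signed installer, rather than from the same bucket as the model.

```python
import hashlib
import hmac


def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_archive(path, expected_sha256):
    """Return True only if the file's digest matches the pinned value.

    The pinned value is assumed to come from a trusted source (for example,
    embedded in the signed application), never from the download location.
    """
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```

A client would call `verify_model_archive` right after the download and refuse to load the archive if it returns `False`. A digest stored next to the model in a writable bucket offers no protection, because an attacker with write access can replace both.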

Figure 7. Wondershare RepairIt pop-up asking the user to download the AI models from the compromised bucket to run the services locally

Figure 8. Bucket address and AI model zip name from the bucket to be downloaded by the binary

Figure 9. An attack flow in which an attacker finds the bucket credentials and replaces the contents with malicious files and AI models

Real-World Impact and Severity

The severity of such a scenario cannot be overstated: a supply chain attack of this kind could affect a large number of users worldwide by delivering malicious payloads through legitimate vendor-signed binaries.

Historical Precedent and Lessons

Incidents such as the ASUS ShadowHammer attack and the SolarWinds Orion attack highlight the disastrous potential of compromised binaries delivered through legitimate supply chain channels. The Wondershare RepairIt case carries the same risks, heightened by the widespread use of locally run AI models (Figures 10 and 11).

Figure 10. AI models from the compromised bucket were saved locally

Broader implications: Beyond data breaches and AI attacks

In addition to the direct exposure of consumer data and the manipulation of AI models, a number of other significant concerns become apparent:

- Intellectual property theft. Competitors with access to proprietary models and source code could reverse-engineer sophisticated algorithms, seriously harming the company’s market position and financial edge.

- Legal and regulatory implications. Customer data exposure could result in hefty fines, legal action, and mandatory disclosures under GDPR and other privacy regulations, seriously undermining confidence and financial stability. A recent high-profile example of these risks is TikTok, which was fined €530 million in the EU in May for violating data privacy rules.

- Loss of customer confidence. Security lapses significantly damage customer trust. Trust is hard to earn and easy to lose, and losing it can lead to widespread customer attrition and significant long-term economic impact.

- Consequences for insurance and vendor liability. Beyond direct fines, such breaches have operational and financial ramifications. Insurance claims, lost vendor agreements, and subsequent vendor blacklisting add considerably to the financial damage.

The Bottom Line

The drive for continuous innovation fuels an organization’s rush to bring new features to market and stay competitive, yet organizations may not anticipate the new, unforeseen ways these features could be used or how their functionality may evolve over time. This is how significant security implications can be overlooked. For this reason, it is essential to establish a robust security process across the entire organization, including the CI/CD pipeline.

Transparency about data usage and processing practices is essential not only for preserving customer confidence in AI-powered solutions but also for regulatory compliance. Businesses must make sure that their actual practices are in line with their published privacy policies in order to close the gap between policy and practice. To keep up with the threats associated with the development and deployment of AI, security protocols must be continuously reviewed and improved. Strict governance and security-by-design principles are essential for enterprises to protect their proprietary technologies and customer trust.

About The Author:

Yogesh Naager is a content marketer who specializes in the cybersecurity and B2B space. Besides writing for the News4Hackers blogs, he also writes for brands including Craw Security, Bytecode Security, and NASSCOM.
