Microsoft Finds a “Whisper Leak” Attack That Detects AI Chat Topics in Encrypted Traffic

Microsoft has disclosed details of a new side-channel attack against remote language models that, under certain circumstances, could allow a passive adversary who can observe network traffic to infer the topics of model conversations despite encryption.

The company pointed out that the privacy of user and business communications could be seriously jeopardized by this leakage of data exchanged between humans and streaming-mode language models. Whisper Leak is the code name for the attack.

According to security researchers Jonathan Bar Or and Geoff McDonald of the Microsoft Defender Security Research Team, “Cyber attackers in a position to observe the encrypted traffic (for example, a nation-state actor at the internet service provider layer, someone on the local network, or someone connected to the same Wi-Fi router) could use this cyber attack to infer if the user’s prompt is on a specific topic.”

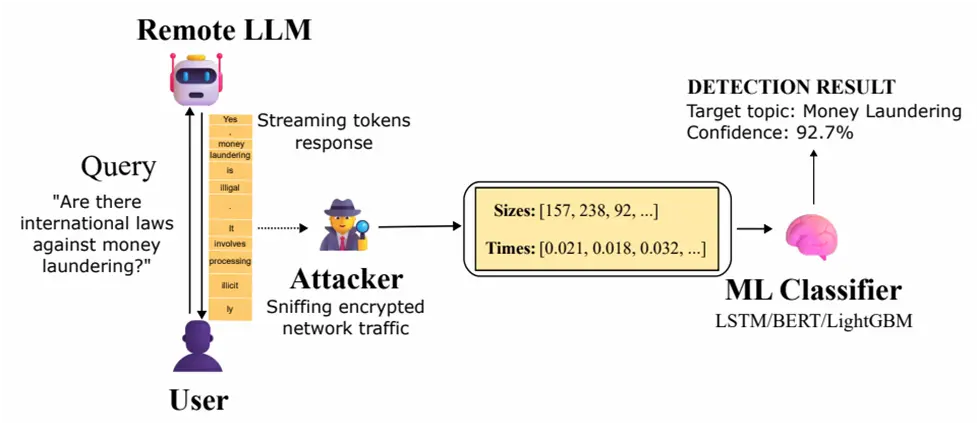

In other words, the technique enables an attacker to observe encrypted TLS traffic between a user and an LLM service, extract packet sizes and timing sequences, and apply trained classifiers to determine whether the topic of the conversation falls into a sensitive target category.
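As a rough illustration of the first step, the sketch below turns a passive capture into the two signals the article mentions, packet sizes and inter-arrival times. The function name `extract_features` and the sample capture are hypothetical and are not taken from Microsoft's research; a real capture would come from a packet sniffer.

```python
from typing import List, Tuple

def extract_features(packets: List[Tuple[float, int]]) -> List[Tuple[int, float]]:
    """Convert (timestamp_seconds, encrypted_size_bytes) records into
    (size, inter_arrival_time) pairs. No payload is ever decrypted."""
    features = []
    prev_time = None
    for timestamp, size in packets:
        # The first packet has no predecessor, so its gap is set to 0.0.
        gap = 0.0 if prev_time is None else timestamp - prev_time
        features.append((size, gap))
        prev_time = timestamp
    return features

# Hypothetical capture of one streamed response (made-up values).
capture = [(0.0, 120), (0.5, 87), (0.75, 93), (1.5, 150)]
features = extract_features(capture)
print(features)
```

A sequence like this, collected per conversation, is the kind of input a topic classifier would be trained on.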

Streaming in large language models (LLMs) lets the client receive a response incrementally as the model generates it, instead of waiting for the complete output to be computed. It is an important feedback mechanism, since some responses can take a while depending on the complexity of the prompt or task.

Microsoft’s latest technique is noteworthy in part because it works even though communications with artificial intelligence (AI) chatbots are encrypted with HTTPS, which is meant to keep the contents of the exchange confidential and protected from tampering.

Recent years have seen the development of numerous side-channel attacks against LLMs, such as the capacity to deduce the length of individual plaintext tokens from the size of encrypted packets in streaming model responses or the use of timing discrepancies brought on by caching LLM inferences to carry out input theft (also known as InputSnatch).
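The token-length leak can be pictured with a minimal sketch. It assumes, purely for illustration, that each streamed token travels in its own encrypted record and that encryption and framing add a fixed number of bytes; the constant `ASSUMED_OVERHEAD` is hypothetical and does not describe any particular provider.

```python
ASSUMED_OVERHEAD = 60  # hypothetical fixed framing + encryption bytes per record

def infer_token_lengths(record_sizes, overhead=ASSUMED_OVERHEAD):
    """Estimate plaintext token lengths from encrypted record sizes,
    assuming one token per record and a constant overhead."""
    return [max(size - overhead, 0) for size in record_sizes]

# Made-up record sizes as a passive observer might see them.
lengths = infer_token_lengths([63, 65, 62, 68])
print(lengths)
```

Under these assumptions the ciphertext sizes reveal the token lengths directly, which is why streaming tokens one at a time is risky even over TLS.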

Building on these discoveries, Whisper Leak investigates whether “the sequence of encrypted packet sizes and inter-arrival times during a streaming language model response contains enough information to classify the topic of the initial prompt, even in the cases where responses are streamed in groupings of tokens,” according to Microsoft.

Microsoft said that, to test this hypothesis, it trained a binary classifier as a proof of concept that can distinguish between prompts on a specific topic and everything else (i.e., noise), using three different machine learning models: LightGBM, Bi-LSTM, and BERT.
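To make the idea of a "topic versus noise" binary classifier concrete, here is a deliberately simple stand-in. It is a nearest-centroid classifier over two summary features, not LightGBM, Bi-LSTM, or BERT, and all traces are synthetic toy values invented for this sketch; every function name is hypothetical.

```python
from statistics import mean

def summarize(trace):
    """Reduce a trace of (size, gap) pairs to (mean size, mean gap)."""
    return (mean(s for s, _ in trace), mean(g for _, g in trace))

def centroid(traces):
    """Average the summary points of several labeled traces."""
    points = [summarize(t) for t in traces]
    return tuple(mean(axis) for axis in zip(*points))

def is_target_topic(trace, target_centroid, noise_centroid):
    """Return True if the trace lies closer to the target-topic centroid."""
    point = summarize(trace)
    def dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return dist(point, target_centroid) < dist(point, noise_centroid)

# Synthetic training traces (toy values, not measurements).
target_traces = [[(200, 0.5), (220, 0.5)], [(210, 0.5), (230, 0.5)]]
noise_traces = [[(80, 0.25), (90, 0.25)], [(100, 0.25), (70, 0.25)]]
target_c = centroid(target_traces)
noise_c = centroid(noise_traces)

print(is_target_topic([(205, 0.5), (215, 0.5)], target_c, noise_c))
print(is_target_topic([(85, 0.25), (95, 0.25)], target_c, noise_c))
```

The models named in the article work on the full sequence rather than on averages, so this toy only shows the shape of the task: labeled traffic traces in, a yes/no topic decision out.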

As a result, many models from Mistral, xAI, DeepSeek, and OpenAI were found to yield scores above 98%. This means that an attacker monitoring random conversations with the chatbots could reliably flag those on the targeted topic.

“If a government agency or internet service provider were monitoring traffic to a popular AI chatbot, they could reliably identify users asking questions about specific sensitive topics – whether that’s money laundering, political dissent, or other monitored subjects – even though all the traffic is encrypted,” Microsoft stated.

To make matters worse, the researchers found that Whisper Leak becomes more effective as the attacker collects more training samples over time, which could turn it into a practical threat. Following responsible disclosure, OpenAI, Microsoft, Mistral, and xAI have all deployed mitigations to counter the risk.

“When coupled with more complex attack models and the richer characteristics available in multi-turn conversations or multiple conversations from the same user, this means a cyberattacker with patience and resources could achieve higher success rates than our initial results suggest,” Microsoft added.

One effective countermeasure, devised by OpenAI, Microsoft, and Mistral, is to add a “random sequence of text of variable length” to each response. This masks the length of each token, rendering the side channel ineffective.
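The padding idea can be sketched as follows. This is an assumption-laden illustration, not any provider's actual implementation: the JSON layout, the field name `padding`, and the bound `MAX_PAD` are all hypothetical.

```python
import json
import secrets
import string

MAX_PAD = 32  # hypothetical upper bound on padding length

def pad_chunk(token_text: str, max_pad: int = MAX_PAD) -> str:
    """Wrap a streamed token in a JSON chunk with random-length filler,
    so the chunk size no longer tracks the token length."""
    pad_len = secrets.randbelow(max_pad + 1)  # uniform in 0..max_pad
    padding = "".join(secrets.choice(string.ascii_letters) for _ in range(pad_len))
    return json.dumps({"text": token_text, "padding": padding})

chunk = pad_chunk("hello")
decoded = json.loads(chunk)
print(len(chunk), decoded["text"])
```

The client simply discards the `padding` field, while an observer sees chunk sizes that vary independently of the token being sent.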

Additionally, Microsoft advises users who are worried about their privacy when interacting with AI providers to avoid discussing highly sensitive topics on untrusted networks, use a VPN for an additional layer of protection, use non-streaming LLM modes, and switch to providers that have implemented mitigations.

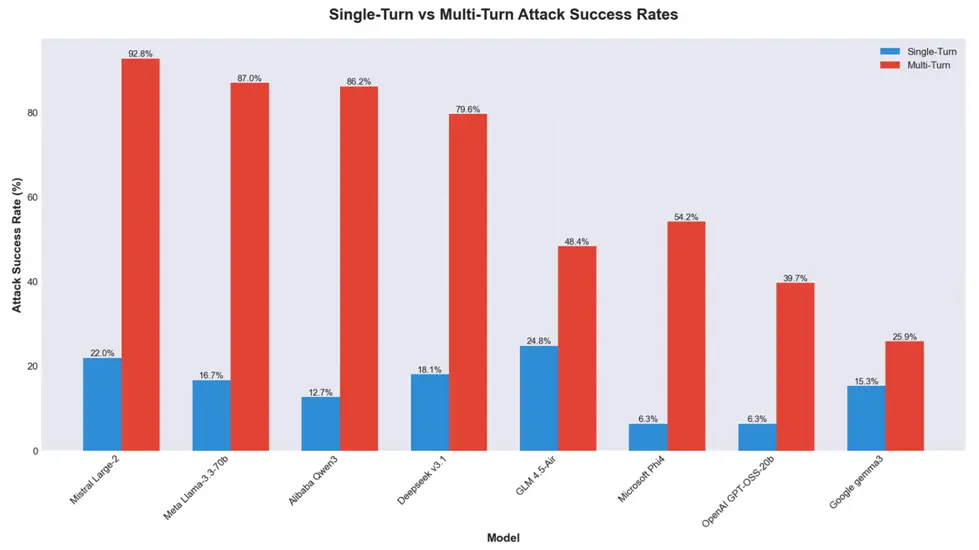

Separately, eight open-weight LLMs from Alibaba (Qwen3-32B), DeepSeek (v3.1), Google (Gemma 3-1B-IT), Meta (Llama 3.3-70B-Instruct), Microsoft (Phi-4), Mistral (Large-2 aka Large-Instruct-2407), OpenAI (GPT-OSS-20b), and Zhipu AI (GLM 4.5-Air) were found to be highly susceptible to adversarial manipulation, particularly in multi-turn attacks.

In an accompanying study, Cisco AI Defense researchers Amy Chang, Nicholas Conley, Harish Santhanalakshmi Ganesan, and Adam Swanda stated, “These results underscore a systemic inability of current open-weight models to maintain safety guardrails across extended interactions.”

“We assess that alignment strategies and lab priorities significantly influence resilience: capability-focused models such as Llama 3.3 and Qwen 3 demonstrate higher multi-turn susceptibility, whereas safety-oriented designs such as Google Gemma 3 exhibit more balanced performance.”

Since OpenAI ChatGPT’s public debut in November 2022, a growing body of research has revealed fundamental security flaws in LLMs and AI chatbots. These findings demonstrate that organizations adopting open-source models may face operational risks in the absence of additional security guardrails.

Because of this, it is imperative that developers implement strict system prompts that are in line with defined use cases, fine-tune open-weight models to make them more resilient to jailbreaks and other attacks, perform regular AI red-teaming assessments, and enforce sufficient security controls when integrating such capabilities into their workflows.

About The Author:

Yogesh Naager is a content marketer who specializes in the cybersecurity and B2B space. Besides writing for the News4Hackers blogs, he also writes for brands including Craw Security, Bytecode Security, and NASSCOM.